PSHA

Motivation & Objectives

WP5 is dedicated to developing and implementing new and more realistic approaches for PSHA computations. The work program of this WP gathers together a suite of research actions related to testing and updating PSHA results against observations, and to developing new methods to increase the robustness of source and recurrence models.

Selected topics of research include:

- non-Poissonian recurrence models

- alternative formulations for the maximum magnitude

- non-ergodic GMPEs and associated implementation into PSHA software

- analytical propagation of epistemic uncertainties on GMPEs

- hazard disaggregation

- vector hazard

- PSHA testing and model evaluation based on observations (fragile geological features such as precarious rocks, instrumental records and/or historical macroseismic observations)

Coordination

Norm ABRAHAMSON (PG&E) & Irmela ZENTNER (EDF)

Actions

Non-Poisson recurrence models for zones

Motivations

Non-Poisson (renewal) models based on the time since the last earthquake are used for faults. Rates for zones may also be time-dependent. Depending on the spatial correlation lengths of non-Poisson behavior for zones, this can be a large contributor to the epistemic uncertainty in the hazard. The correlation lengths for the non-Poisson behavior of zones therefore need to be developed.

Program of research

Use California seismicity data to evaluate the non-Poisson behavior of off-fault seismicity. Develop models for the range of scale factors on the Poisson rates for zones.
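To illustrate what a scale factor on a Poisson rate can mean, the following minimal Python sketch assumes a Brownian Passage Time (BPT) renewal model, one common choice for faults; it is not the model developed in this action. The function names, the mean recurrence interval and the aperiodicity are hypothetical. The factor is the ratio of the renewal conditional probability in a time window, given the time elapsed since the last event, to the Poisson probability for the same window and the same mean rate.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def bpt_cdf(t, mean, alpha):
    """CDF of the Brownian Passage Time (inverse Gaussian) renewal model.

    mean  -- mean recurrence interval (years)
    alpha -- aperiodicity; very small values (< ~0.1) overflow exp(2 / alpha**2)
    """
    if t <= 0.0:
        return 0.0
    r = math.sqrt(t / mean)
    u1 = (r - 1.0 / r) / alpha
    u2 = (r + 1.0 / r) / alpha
    return norm_cdf(u1) + math.exp(2.0 / alpha**2) * norm_cdf(-u2)


def poisson_scale_factor(elapsed, window, mean, alpha):
    """Renewal conditional probability in [elapsed, elapsed + window] divided
    by the Poisson probability for the same window and rate 1 / mean."""
    f0 = bpt_cdf(elapsed, mean, alpha)
    f1 = bpt_cdf(elapsed + window, mean, alpha)
    p_renewal = (f1 - f0) / (1.0 - f0)
    p_poisson = 1.0 - math.exp(-window / mean)
    return p_renewal / p_poisson


# Hypothetical source: mean recurrence 1000 yr, aperiodicity 0.5, 50-yr window.
for elapsed in (100.0, 1000.0):
    print(elapsed, round(poisson_scale_factor(elapsed, 50.0, 1000.0, 0.5), 2))
```

Shortly after an event the factor is well below 1, and near the mean recurrence interval it exceeds 1; for zones, the open question raised above is over what spatial scale such factors are correlated.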

Organization

Funding member: PG&E

Type:

Collaboration: University of California, Berkeley/PEER

Status: in progress

Results

Report

Constrain seismicity parameters from the comparison of the theoretical 3-D deformation tensor with geological

Motivations

The principal task of this action is to analyse the consistency of the seismicity parameters, namely the Gutenberg-Richter (G-R) parameters and Mmax.

Program of research

Strain tensors are computed for every branch of the logic tree and then compared with the strain rate estimates. An expert evaluation of acceptable strain rate values for mainland France is provided, and the impact on PSHA results is evaluated.
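One way to obtain a theoretical deformation rate from a single logic-tree branch is sketched below under stated assumptions: the branch's G-R a and b values and Mmax give an annual seismic moment rate (using M0 = 10^(1.5 Mw + 9.1) N m), and a scalar Kostrov summation converts it into a strain rate for an assumed seismogenic volume. The shear modulus, the volume and the function names are illustrative, and the full tensor computation used in this action is not reproduced here.

```python
import math


def moment_from_mw(mw):
    """Seismic moment (N m) from moment magnitude, M0 = 10**(1.5 Mw + 9.1)."""
    return 10.0 ** (1.5 * mw + 9.1)


def moment_rate_truncated_gr(a, b, m_min, m_max):
    """Annual moment rate (N m/yr) of the G-R density n(m) = b ln10 10**(a - b m),
    cut off between m_min and m_max (closed form, valid for b != 1.5)."""
    c = 1.5 - b
    return (b / c) * 10.0 ** (a + 9.1) * (10.0 ** (c * m_max) - 10.0 ** (c * m_min))


def kostrov_strain_rate(moment_rate, area_m2, thickness_m, shear_modulus=3.0e10):
    """Scalar Kostrov strain rate (1/yr): moment rate / (2 mu V)."""
    return moment_rate / (2.0 * shear_modulus * area_m2 * thickness_m)


# Hypothetical branch: a = 3.0, b = 1.0, Mmax = 6.5, in a 100 km x 100 km zone
# with a 15 km seismogenic thickness.
rate = moment_rate_truncated_gr(3.0, 1.0, 4.0, 6.5)
print(f"{rate:.3e} N m/yr -> {kostrov_strain_rate(rate, 1.0e10, 1.5e4):.2e} /yr")
```

Repeating this for every branch gives a distribution of seismicity-implied strain rates that can be set against the geodetic or geological estimates.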

Organization

Funding member: Orano

Type: contract

Collaboration: Seister, ENS

Status: in progress

Results

Report

Extreme events: assess Mmax from a statistical perspective

Report available! “Bayesian estimation of the maximum magnitude Mmax based on the statistics of extremes” by I. Zentner.

Motivations

Probabilistic Seismic Hazard Assessment (PSHA) aims to evaluate the annual frequencies of exceeding given levels of a ground-motion intensity measure such as PGA (Peak Ground Acceleration) or PSA (Pseudo-Spectral Acceleration). For this purpose, the occurrence rates of earthquakes and the distribution of their magnitudes must be described. The most widely used magnitude distribution is the exponential distribution derived from the Gutenberg-Richter (GR) law. Numerous studies and applications have shown that the GR distribution is appropriate for modelling magnitudes in the low and moderate ranges. However, it deviates from the log-linear model in the high-magnitude range. For this reason, and to account for the finite energy of faults, the GR distribution is generally truncated at a maximum possible magnitude value.
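The truncated GR law described above can be written in a few lines. This Python sketch (hypothetical function names) gives the magnitude CDF truncated between a minimum magnitude m_min and Mmax, and the resulting annual rate of exceeding a magnitude, assuming b is the usual G-R slope so that beta = b ln 10.

```python
import math


def truncated_gr_cdf(m, b, m_min, m_max):
    """CDF of magnitude for the G-R law truncated between m_min and m_max."""
    if m <= m_min:
        return 0.0
    if m >= m_max:
        return 1.0
    beta = b * math.log(10.0)
    return (1.0 - math.exp(-beta * (m - m_min))) / (1.0 - math.exp(-beta * (m_max - m_min)))


def rate_exceeding(m, rate_above_m_min, b, m_min, m_max):
    """Annual rate of earthquakes with magnitude greater than m."""
    return rate_above_m_min * (1.0 - truncated_gr_cdf(m, b, m_min, m_max))


# Hypothetical source: 0.5 events/yr above M4, b = 1.0, Mmax = 7.0.
for m in (5.0, 6.0, 6.9):
    print(m, rate_exceeding(m, 0.5, 1.0, 4.0, 7.0))
```

Near Mmax the truncated rates fall below the log-linear (untruncated) G-R line, which is exactly where the choice of Mmax matters for the hazard.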

Program of research

This report addresses the estimation of the maximum magnitude in the truncated GR law by means of a Bayesian approach involving extreme value statistics. The Bayesian updating approach makes it possible to combine different sources of information and to overcome the bias of the simple maximum likelihood estimator. The prior distribution of the maximum magnitude is developed by drawing analogies with tectonically comparable regions, which enlarges the dataset and yields a generic distribution; this generic prior is then updated for a particular configuration through the likelihood function. When Poissonian occurrence of earthquakes is assumed, the maximum magnitude mmax and its probability distribution (linked to epistemic uncertainty) can be estimated by deriving the extreme value distribution analytically.

We propose a new method to construct the likelihood function based on the distribution of extremes of the truncated GR law. The proposed method improves on former developments by EPRI (Johnston, 1994), further promoted by the USNRC (2012), in which the Bayesian updating approach is used to account for prior information from similar tectonic zones and for expert judgment. In the proposed method, only the completeness period of the largest observed magnitude mmax,obs is required, so there is no need to determine and use exact completeness periods for the magnitude bins of smaller events, nor to introduce the associated uncertainties. This makes the approach easy to implement and apply. The proposed method is more rigorous than the EPRI/USNRC Bayesian updating approach and outperforms it in terms of precision. As with the EPRI method, the approach can handle the case where mmax,obs lies outside its completeness interval. The analyses conducted in this report with simulated catalogues demonstrate the capability of the Bayesian updating approach to correctly estimate mmax for the observation periods available in France.
It is acknowledged here that the analytical expressions of extreme value distributions can be derived as long as Poisson occurrence is assumed (the magnitude distribution can be the GR law or any other).
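As a rough illustration of Bayesian updating with an extreme-value likelihood, the sketch below assumes Poisson occurrence with an expected number `n_expected` of events above `m_min` during the completeness period of the largest observed magnitude, magnitudes following the truncated GR law, and a prior for mmax discretized on a grid. It is a generic grid-based update, not the exact likelihood derived in the report; all names and numbers are hypothetical.

```python
import numpy as np


def truncated_gr_pdf_cdf(m, b, m_min, m_max):
    """PDF and CDF at magnitude m of the G-R law truncated at m_max (array m_max ok)."""
    beta = b * np.log(10.0)
    norm = 1.0 - np.exp(-beta * (m_max - m_min))
    pdf = beta * np.exp(-beta * (m - m_min)) / norm
    cdf = (1.0 - np.exp(-beta * (m - m_min))) / norm
    return pdf, cdf


def max_magnitude_likelihood(m_obs, mmax_grid, n_expected, b, m_min):
    """Density of the largest magnitude among a Poisson number of events
    (mean n_expected above m_min), evaluated at m_obs for each candidate mmax:
    n f(m_obs) exp(-n (1 - F(m_obs))), and zero when mmax < m_obs."""
    mmax_grid = np.asarray(mmax_grid, dtype=float)
    like = np.zeros_like(mmax_grid)
    ok = mmax_grid >= m_obs
    pdf, cdf = truncated_gr_pdf_cdf(m_obs, b, m_min, mmax_grid[ok])
    like[ok] = n_expected * pdf * np.exp(-n_expected * (1.0 - cdf))
    return like


def posterior_mmax(mmax_grid, prior, m_obs, n_expected, b, m_min):
    """Grid-based Bayesian update of a prior on mmax."""
    post = np.asarray(prior, dtype=float) * max_magnitude_likelihood(
        m_obs, mmax_grid, n_expected, b, m_min)
    return post / post.sum()


# Hypothetical inputs: normal prior (mean 6.5, sd 0.5) from analogue regions,
# largest observed magnitude 5.8, 50 expected events above M4.0 in its
# completeness period, b = 1.0.
grid = np.linspace(5.0, 8.0, 301)
prior = np.exp(-0.5 * ((grid - 6.5) / 0.5) ** 2)
post = posterior_mmax(grid, prior, m_obs=5.8, n_expected=50.0, b=1.0, m_min=4.0)
print("posterior mean mmax:", float(np.sum(grid * post)))
```

Candidate values below the observed maximum receive zero posterior weight, and the likelihood penalizes large mmax values that would make the observed maximum implausibly small for the given number of events.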

Organization

Funding member: EDF

Type: in-kind

Collaboration: EDF

Status: completed

Figure source: PEGASOS

Fault-specific PSHA in low-activity regions

Motivations

Assess the precision (level of knowledge) of the source parameters that is required for fault-based modelling to perform better than area sources in PSHA.

Program of research

Define and propagate uncertainties, and assess the impact of uncertainty and the predictability of specific fault models versus diffuse seismicity models, using a synthetic fault model and a simple PSHA.

Comment: PG&E uses simplified factors to account for the difference between point sources and faults, and the PEER PSHA code comparisons show that this works well.
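A "synthetic fault model + simple PSHA" comparison can be sketched as follows. Everything here is an assumption for illustration: the GMPE coefficients are invented (not a published model), the area source is a uniform square around the site, the fault is a line of point ruptures at a fixed offset, and both sources share the same activity rate and magnitude distribution. The hazard integral is the standard sum over magnitude and distance of the conditional probability of exceedance.

```python
import math


def norm_sf(z):
    """Standard normal survival function, P(Z > z)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def toy_gmpe(m, r_km):
    """Hypothetical GMPE returning (median ln PGA [g], sigma); coefficients are
    invented for illustration and do not come from a published model."""
    return -3.5 + 0.9 * m - 1.2 * math.log(r_km + 10.0), 0.6


def hazard(pga, distances, mags, mag_probs, rate):
    """Annual rate of PGA > pga: rate * sum_M sum_R P(M) P(R) P(PGA > pga | M, R),
    with all distances treated as equally likely."""
    total = 0.0
    for m, pm in zip(mags, mag_probs):
        for r in distances:
            mu, sigma = toy_gmpe(m, r)
            total += pm * norm_sf((math.log(pga) - mu) / sigma) / len(distances)
    return rate * total


def area_distances(half_width=50.0, n=41, depth=10.0):
    """Site at the centre of a uniform square area source (grid of point sources)."""
    xs = [-half_width + 2.0 * half_width * i / (n - 1) for i in range(n)]
    return [math.sqrt(x * x + y * y + depth**2) for x in xs for y in xs]


def fault_distances(length=60.0, offset=10.0, n=61, depth=10.0):
    """Point ruptures spread uniformly along a straight fault trace."""
    xs = [-length / 2.0 + length * i / (n - 1) for i in range(n)]
    return [math.sqrt(x * x + offset**2 + depth**2) for x in xs]


# Same activity on both source models: 0.05 events/yr with M5.0-7.0 and b = 1.
mags = [5.05 + 0.1 * i for i in range(20)]
weights = [10 ** (-1.0 * m) for m in mags]
mag_probs = [w / sum(weights) for w in weights]
for pga in (0.05, 0.1, 0.2):
    h_area = hazard(pga, area_distances(), mags, mag_probs, 0.05)
    h_fault = hazard(pga, fault_distances(), mags, mag_probs, 0.05)
    print(f"PGA {pga} g: area {h_area:.2e}/yr, fault {h_fault:.2e}/yr")
```

Perturbing the fault geometry or activity and re-running gives a first feel for how much source knowledge is needed before the fault model changes the hazard more than the diffuse model's uncertainty.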

Organization

Funding member: EDF

Type: in-kind

Collaboration: EDF

Status: not started

Results

Report

Non-ergodic GMPEs in PSHA

Uncertainties in ground motion models, effect of nonergodic models and propagation of epistemic uncertainty in PSHA

Reports available!

"Uncertainties in GMPEs, Effect of Non-Ergodic Models" by N. Abrahamson (2.49 MB)

"Efficient Calculation of PSHA with Epistemic Uncertainty in the Ground-Motion Model" by M. Lacour & N. Abrahamson (8.96 MB)

Motivations

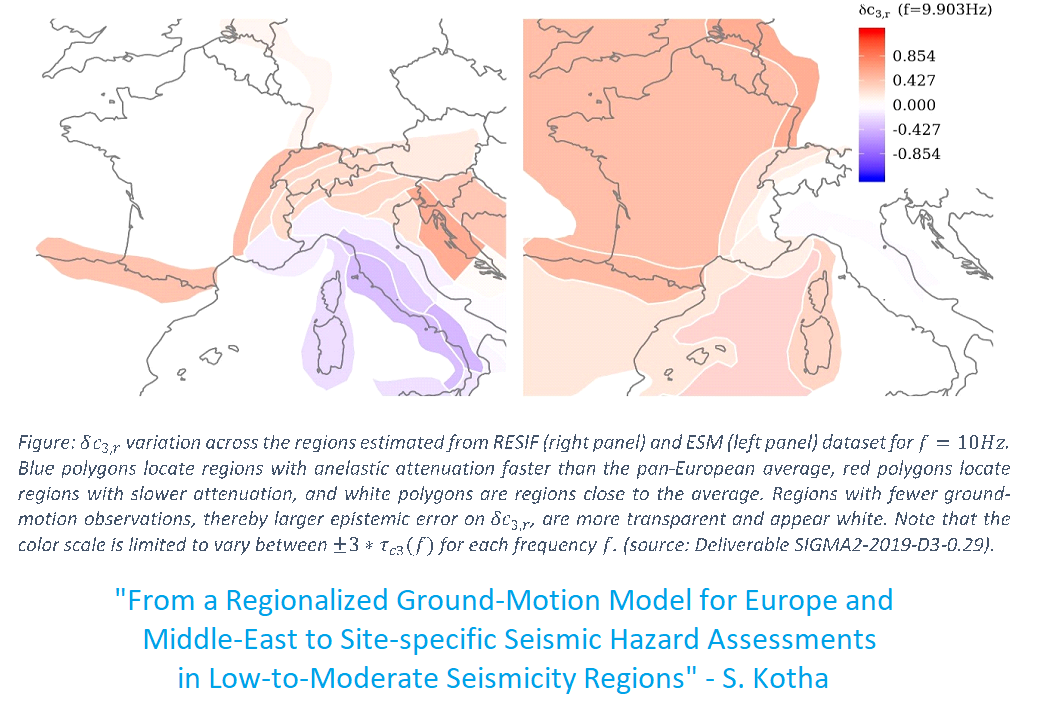

PSHA practice is moving toward non-ergodic Ground Motion Models (GMMs), in which the median ground motion for a given magnitude and distance varies with the location of the earthquake and the location of the site. One major motivation for such regionalization is the observation that about 60% of the aleatory variance in ergodic GMPEs is due to systematic (source, path and site) effects. The non-ergodic GMM is based on the Varying Coefficient Model (VCM) of Landwehr et al. (2016), in which spatially varying coefficients are estimated, and introduces path-dependent attenuation by adding a non-ergodic large-distance (Q) term following Abrahamson et al. (2017) and Kuehn (2019).
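Spatially varying coefficients of the kind used in the VCM are typically modelled as Gaussian processes. The minimal sketch below covers only this building block, not the Landwehr et al. (2016) model: it predicts a zero-mean coefficient at new locations from noisy estimates at observed locations, assuming an exponential covariance. The variance, correlation length and noise level are hypothetical.

```python
import numpy as np


def exp_kernel(xa, xb, variance, length_km):
    """Exponential covariance between two sets of 2-D locations (km)."""
    d = np.linalg.norm(xa[:, None, :] - xb[None, :, :], axis=-1)
    return variance * np.exp(-d / length_km)


def predict_coefficient(x_obs, c_obs, x_new, variance=0.3**2, length_km=50.0, noise_var=0.05**2):
    """Posterior mean and standard deviation of a zero-mean spatially varying
    coefficient at x_new, given noisy estimates c_obs at x_obs (GP regression)."""
    k_oo = exp_kernel(x_obs, x_obs, variance, length_km) + noise_var * np.eye(len(x_obs))
    k_no = exp_kernel(x_new, x_obs, variance, length_km)
    mean = k_no @ np.linalg.solve(k_oo, c_obs)
    cov = exp_kernel(x_new, x_new, variance, length_km) - k_no @ np.linalg.solve(k_oo, k_no.T)
    return mean, np.sqrt(np.clip(np.diag(cov), 0.0, None))


# Hypothetical adjustment terms (ln units) estimated at two stations 100 km apart.
x_obs = np.array([[0.0, 0.0], [100.0, 0.0]])
c_obs = np.array([0.4, -0.2])
x_new = np.array([[0.0, 0.0], [50.0, 0.0], [5000.0, 5000.0]])
mean, std = predict_coefficient(x_obs, c_obs, x_new)
print(mean, std)
```

Far from the data the prediction reverts to the ergodic value (zero) with the full prior standard deviation; that location-dependent epistemic uncertainty is what the PSHA then has to propagate.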

Program of research

For the non-ergodic approach, use of a small number of alternative GMMs in the logic tree will not adequately capture the effects of the epistemic uncertainty in the location-specific source and path effects in the non-ergodic GMMs. Instead, hundreds of alternative GMMs will be needed to capture the epistemic uncertainty of the nonergodic path terms from different ray paths through the crust. Using a large number of branches on the logic tree leads to a large increase in the computational time required for the PSHA. To make the fully non-ergodic GMM method practical for PSHA applications, an efficient method to propagate the epistemic uncertainty in median ground motion is needed to capture the range of source and path effects for each source and site location. Rather than requiring faster and faster computers, we can dramatically speed up the calculations using the Polynomial Chaos (PC) approximation for propagating the effect of the epistemic uncertainty in the hazard due to the epistemic uncertainty in the median ground motion. Using the PC method, more accurate fractiles can be estimated in less time as compared to the traditional approach using logic trees with discrete sampling of the distribution for the median GMM.
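The polynomial chaos idea can be illustrated on a toy problem. In the sketch below, assumed for illustration only, the epistemic uncertainty in the median is a single standard normal variable `xi` shifting ln(median) by `sigma_mu * xi`, the hazard is that of a single scenario, and the log-hazard is projected on probabilists' Hermite polynomials with Gauss-Hermite quadrature from `numpy.polynomial.hermite_e`. The surrogate is then sampled cheaply to obtain fractiles. This is not the Lacour & Abrahamson implementation; the parameter values and names are hypothetical.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He


def norm_sf(z):
    """Standard normal survival function, vectorized over arrays."""
    return 0.5 * np.vectorize(math.erfc)(np.asarray(z, dtype=float) / math.sqrt(2.0))


def hazard_given_xi(xi, rate=0.01, ln_a_minus_mu=1.0, sigma=0.6, sigma_mu=0.3):
    """Toy single-scenario hazard when the median ln ground motion is shifted by
    sigma_mu * xi, with xi ~ N(0, 1) representing the epistemic uncertainty."""
    return rate * norm_sf((ln_a_minus_mu - sigma_mu * np.asarray(xi, dtype=float)) / sigma)


def fit_pc(func, degree=6, n_quad=20):
    """Project log(func(xi)) on probabilists' Hermite polynomials He_k(xi)."""
    x, w = He.hermegauss(n_quad)        # Gauss nodes/weights for exp(-x**2 / 2)
    w = w / math.sqrt(2.0 * math.pi)    # weights now integrate against N(0, 1)
    y = np.log(func(x))
    coeffs = np.empty(degree + 1)
    for k in range(degree + 1):
        unit = np.zeros(k + 1)
        unit[k] = 1.0                   # coefficient vector selecting He_k
        coeffs[k] = np.sum(w * y * He.hermeval(x, unit)) / math.factorial(k)  # E[He_k^2] = k!
    return coeffs


def pc_hazard(coeffs, xi):
    """Evaluate the PC surrogate of the hazard."""
    return np.exp(He.hermeval(xi, coeffs))


coeffs = fit_pc(hazard_given_xi)
rng = np.random.default_rng(0)
samples = pc_hazard(coeffs, rng.standard_normal(20_000))
print("5/50/95% fractiles:", np.percentile(samples, [5, 50, 95]))
```

The expensive hazard function is evaluated only at the 20 quadrature nodes; all fractiles come from the cheap polynomial surrogate, which is the source of the speed-up compared with many discrete logic-tree branches.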

1) Development of a non-ergodic GMPE for California

2) Development of the polynomial chaos (PC) approach for the propagation of epistemic uncertainties in PSHA

3) Implementation in HAZ45 software

Organization

Funding member: PG&E

Type: contract

Collaboration: PEER/University of California - Berkeley

Status: completed

Results

Report

Non-ergodic GMPE in French PSHA

Motivations

This task addresses the development of a non-ergodic GMPE, its implementation in French PSHA, and non-ergodic hazard calculations for sites in France. GFZ is currently developing non-ergodic GMPEs for Europe following the Landwehr et al. (2016) methodology, which was first applied to California. The GFZ study focuses on data-rich regions in Europe such as Italy. An initial non-ergodic GMPE for France is developed here for use in trial hazard calculations.

Non-ergodic models require capturing the epistemic uncertainties in all of the coefficients, which leads to a large number of branches to capture all of the different path effects for different source-site combinations. As a consequence, the GMPE logic-tree approach is not computationally practical for high-end PSHA calculations, and a more efficient way to propagate epistemic uncertainties is needed. Lacour & Abrahamson (2019) addressed this by using a polynomial chaos expansion to replace the PSHA model with a simpler (meta-)model.

For France, the ground-motion data will come mainly from small-magnitude earthquakes. The path terms in non-ergodic GMPEs come from linear path effects in the crust. Because the scaling of response spectra depends on the spectral shape, using response spectra from small-magnitude earthquakes to constrain the non-ergodic terms in the GMPE may result in GMPEs that cannot be reliably extrapolated to large earthquakes. Rather than using response spectral values, models of the Fourier amplitude spectra (FAS) in France will be developed from small-earthquake data to capture the linear source, path and site effects. The non-ergodic FAS adjustment factors can then be converted into response spectral adjustment factors for larger-magnitude earthquakes using random vibration theory (RVT) with the appropriate FAS frequency content.

The non-ergodic GMPE and the new capabilities to propagate epistemic uncertainties by polynomial chaos expansion will be implemented in the OpenQuake software by GEM.
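The FAS-to-response-spectrum conversion can be sketched with random vibration theory: the oscillator response spectrum is obtained by filtering the FAS through a single-degree-of-freedom transfer function, its rms value follows from Parseval's theorem, and a peak factor converts rms to peak. The Python sketch below assumes a Davenport peak factor, takes the rms duration equal to the ground-motion duration, and uses a hypothetical omega-squared FAS shape; it is a simplified illustration, not the RVT implementation used in the project.

```python
import math
import numpy as np


def trapezoid(y, x):
    """Trapezoidal integral of y(x)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def brune_fas(f, fc, kappa=0.03, scale=1.0):
    """Hypothetical omega-squared acceleration FAS shape with a kappa
    high-frequency filter; amplitude units are arbitrary."""
    return scale * f**2 / (1.0 + (f / fc) ** 2) * np.exp(-math.pi * kappa * f)


def rvt_psa(f, fas, f_osc, duration, damping=0.05):
    """Peak oscillator (pseudo-acceleration) response from an acceleration FAS.
    Simplified RVT: Davenport peak factor, rms duration = ground-motion duration."""
    h = f_osc**2 / np.sqrt((f_osc**2 - f**2) ** 2 + (2.0 * damping * f * f_osc) ** 2)
    y2 = (h * fas) ** 2
    m0 = 2.0 * trapezoid(y2, f)                             # zeroth spectral moment
    m2 = 2.0 * trapezoid((2.0 * math.pi * f) ** 2 * y2, f)  # second spectral moment
    a_rms = math.sqrt(m0 / duration)
    n_z = max(2.0 * math.sqrt(m2 / m0) / (2.0 * math.pi) * duration, 1.5)  # extrema count
    s = math.sqrt(2.0 * math.log(n_z))
    return (s + 0.5772 / s) * a_rms


f = np.linspace(0.05, 50.0, 5000)
fas = brune_fas(f, fc=2.0)
delta = np.where(f > 10.0, 0.8, 1.0)   # hypothetical non-ergodic FAS adjustment
for f_osc in (1.0, 5.0):
    ratio = rvt_psa(f, fas * delta, f_osc, 10.0) / rvt_psa(f, fas, f_osc, 10.0)
    print(f"{f_osc} Hz: response spectral adjustment {ratio:.3f}")
```

Because RVT is linear in the FAS amplitude, a frequency-independent FAS adjustment maps to the same response spectral factor, whereas a frequency-dependent one gives a factor that depends on the scenario's spectral shape, which is why the conversion is done for the larger-magnitude FAS.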

Program of research

1. Development of non-ergodic GMPE adjustment terms for France (5 Hz and 1 Hz)

2. Implementation in OpenQuake

3. Trial PSHA calculations for three sites in France

4. PSHA code comparison (OpenQuake and HAZ45) for non-ergodic hazard

Organization

Funding member: EDF

Type: post-doc

Collaboration: PEER/UCB, GEM, EDF

Status: in progress

Results

Report

Improved disaggregation for selecting a representative design scenario

Motivations

The standard approach for selecting a single representative scenario from PSHA is to use either the modal magnitude and distance from the probability mass function of the joint M-R distribution, or the mean magnitude and mean distance from the marginal distributions of magnitude and distance. Both the mean values and the modal values have limitations. The objective of this task is to develop a methodology for selecting a representative scenario from the disaggregation that has three features: (1) it is a physically realistic scenario (i.e. it does not fall between two modes), (2) it is not strongly sensitive to bin size, and (3) it is not sensitive to how the sources are subdivided. The first feature addresses the shortcoming of using the mean values over all sources. The second feature addresses the shortcoming of using the mode. The third feature is not an issue in current practice with the mean or the mode, but it can become one with alternative methods.

Program of research

The proposed approach is to compute the mean M and R separately for each seismic source. This approach was selected because, for fault sources and for areal sources based on uniform zones, the disaggregation for each source is not bimodal, so the mean provides a physically realistic scenario. It also avoids the sensitivity to bin size that affects the mode. A key advantage of using the mean values by source is that the method can be applied to estimate all of the source and distance parameters that are inputs to the ground-motion models and are needed to compute the ground motion for the selected scenario. The proposed approach has been implemented in the HAZ45 PSHA program.
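The difference between the three summaries can be seen on a small made-up disaggregation with one near, moderate-magnitude source and one distant, large-magnitude source. The Python sketch below (hypothetical function and data) computes the overall mean (M, R), the modal (M, R) bin, and the contribution-weighted mean (M, R) of each source.

```python
from collections import defaultdict


def disagg_summaries(contribs):
    """contribs: list of (source_id, magnitude, distance_km, hazard_contribution).

    Returns the overall mean (M, R), the modal (M, R) bin, and for each source
    its contribution-weighted mean M, mean R and fractional contribution."""
    total = sum(item[3] for item in contribs)
    mean_m = sum(m * c for _, m, _, c in contribs) / total
    mean_r = sum(r * c for _, _, r, c in contribs) / total

    joint = defaultdict(float)              # joint M-R mass over all sources
    for _, m, r, c in contribs:
        joint[(m, r)] += c
    mode_mr = max(joint, key=joint.get)

    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    for src, m, r, c in contribs:
        sums[src][0] += m * c
        sums[src][1] += r * c
        sums[src][2] += c
    by_source = {s: (sm / sc, sr / sc, sc / total) for s, (sm, sr, sc) in sums.items()}
    return (mean_m, mean_r), mode_mr, by_source


contribs = [("near", 5.4, 10.0, 0.30), ("near", 5.6, 12.0, 0.25),
            ("far", 7.4, 95.0, 0.20), ("far", 7.6, 105.0, 0.25)]
overall, mode, by_source = disagg_summaries(contribs)
print(overall, mode, by_source)
```

The overall mean (M 6.4 at about 51 km) lies between the two sources and matches neither, the mode picks a single bin of the near source, and the per-source means give one realistic scenario per source together with its share of the hazard.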

There is not a single approach for disaggregation that is the best in all applications, but the mean values by source are often better representative scenarios than either the modal M-R or the mean M and R for all sources.

Organization

Funding member: PG&E, University of California - Berkeley

Type: in-kind

Collaboration: PEER/University of California - Berkeley

Status: completed

Results

Report

Vector hazard

Organization

Funding member: PG&E, EDF

Type: in-kind

Collaboration: PEER/University of California - Berkeley

Status: in progress

Results

Report

PSHA testing and model evaluation

Topics:

- Comparison between French and German PSHA (report available)

- PSHA testing based on observed risk (report available)

- Testing Site-Specific Hazard for the Diablo Canyon Power Plant Using Precarious Rocks (report available)

- Evaluation of SERA seismic hazard assessment using Bayesian approaches (report available)

Comparison between French and German PSHA

Report available: PSHA for France into OpenQuake: Comparisons and Modelling Issues (9.83 MB)

Motivations

This project compares the seismic hazard predicted by the EDF model and by the German national seismic hazard model (Grünthal et al., 2018; hereafter referred to as the "GFZ model") at the France-Germany border. This region is covered by both models, meaning that two independent teams of experts have produced seismic hazard estimates for the same region. The differences between the two hazard estimates will help us understand the sources of uncertainty in PSHA. Regional PSHA projects such as SHARE (Woessner et al., 2015) often face the problem of harmonization across national boundaries because of inconsistencies between the underlying models, so this part of the study can also be seen as an investigation of the harmonization problem.

Program of research

The model comparisons are based on the hazard estimates for PGA and for spectral accelerations at 5 Hz and 1 Hz, at two hazard levels: 10% and 2% probability of exceedance in 50 years. The full logic trees are used, so the hazard estimates are fully probabilistic. Because the two models share a common GMPE, the logic-tree branches using that GMPE are isolated for separate comparisons, which highlights the differences due to the seismicity models of the two PSHA models.
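The two hazard levels correspond to annual exceedance rates under the usual Poisson assumption, p = 1 - exp(-rate * T). This short Python check (illustrative helper name) converts them and gives the familiar return periods of about 475 and 2475 years.

```python
import math


def annual_rate_from_poe(poe, years):
    """Annual exceedance rate matching a probability of exceedance `poe` in
    `years`, assuming Poisson occurrence: poe = 1 - exp(-rate * years)."""
    return -math.log(1.0 - poe) / years


for poe in (0.10, 0.02):
    rate = annual_rate_from_poe(poe, 50.0)
    print(f"{poe:.0%} in 50 years: {rate:.3e} per year, return period {1.0 / rate:.0f} years")
```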

Four aspects of the logic-tree-based ensemble model are used for the comparison: the mean hazard; the inter-quantile range of the hazard estimates, which acts as a proxy for the degree of epistemic uncertainty; a visual comparison of the density functions of the ensemble models; and an overlapping index that summarises the difference between two density functions in a single numeric metric.
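Two of these metrics can be sketched for weighted logic-tree ensembles of hazard values (e.g. log10 of the annual exceedance probability at a site). In this Python sketch, the 16th-84th weighted quantile range and the histogram-based overlap (sum of the minimum of the two normalized histograms) are assumptions for illustration; the exact quantiles and overlapping-index definition used in the report may differ.

```python
import numpy as np


def weighted_quantile(values, weights, q):
    """Quantile of a weighted ensemble (e.g. logic-tree branches and weights)."""
    order = np.argsort(values)
    v = np.asarray(values, dtype=float)[order]
    w = np.asarray(weights, dtype=float)[order]
    cdf = (np.cumsum(w) - 0.5 * w) / w.sum()
    return np.interp(q, cdf, v)


def interquantile_range(values, weights, lo=0.16, hi=0.84):
    """Spread of the ensemble, used as a proxy for epistemic uncertainty."""
    return weighted_quantile(values, weights, hi) - weighted_quantile(values, weights, lo)


def overlap_index(x1, w1, x2, w2, bins=50):
    """Overlap of two weighted ensembles: sum of min(p1, p2) over common
    histogram bins; 1 means identical, 0 means disjoint."""
    edges = np.histogram_bin_edges(np.concatenate([x1, x2]), bins=bins)
    p1, _ = np.histogram(x1, bins=edges, weights=w1)
    p2, _ = np.histogram(x2, bins=edges, weights=w2)
    return float(np.minimum(p1 / p1.sum(), p2 / p2.sum()).sum())


# Hypothetical ensembles of log10 annual PoE at one site from two models.
rng = np.random.default_rng(1)
x = rng.normal(-3.0, 0.2, 500)
y = rng.normal(-2.8, 0.3, 500)
w = np.full(500, 1.0 / 500)
print(interquantile_range(x, w), interquantile_range(y, w), overlap_index(x, w, y, w))
```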

Organization

Funding member: EDF

Type: Post-doc (S. Mak)

Collaboration: GFZ, Potsdam

Status: Ended

Results

Report

PSHA testing based on observed risk

Report available: PSHA testing on observed risk (5.05 MB)

Motivations

Probabilistic seismic hazard analysis (PSHA) has become a fundamental tool in assessing seismic hazards and seismic input motions. It is used for both site-specific evaluation in case of critical facilities and at national or regional scale for building codes.

PSHA outcomes are expected to be affected by uncertainty due to imperfect knowledge of the physical processes that generate seismic ground shaking (faulting, seismic wave propagation, etc.). This uncertainty, whether large or small, is an important component of PSHA outcomes and implies that several possible situations (seismic scenarios) may occur during the expected operational period (TecDoc IAEA in progress). Given this high uncertainty in Probabilistic Seismic Hazard Assessments (PSHA) and the importance of PSHA for seismic design, checking the consistency of PSHA outcomes is highly relevant.

In the last decade, several approaches for testing PSHA results have been published. Several recent opinion papers encourage hazard analysts to carry out tests (Stein 2011, Stirling 2012), and applications have been made in several countries (France, Italy, New Zealand, USA, Mexico, etc.) (Stirling et al. 2006, Brune et al. 2002, Rey et al. 2018). To evaluate PSHA outcomes against observations, most studies use accelerations recorded at instrumented sites. However, stable continental regions with low deformation rates and low seismicity do not have sufficient seismic activity (the recorded accelerations are too low), or the observation period is too short, for testing PSHA outcomes. For these regions, macroseismic data are of primary interest for seismic hazard analysis, and particularly for testing the consistency of seismic hazard estimates with observations.

Despite this interest, research on the subject remains limited. The objective of the present research is to apply a method based on macroseismic data (Labbé 2008, 2010, 2017) to evaluate the consistency with historical seismicity of three hazard maps of metropolitan France (established in 2002, 2012 and 2017), which reveal a dramatic lack of consensus on the subject.

Program of research

The method does not consist of directly comparing accelerations with intensities, but of calculating the seismic risk by two different approaches presented in section 2. The seismic risk is defined as the annual probability that a building of a given vulnerability class experiences a given damage grade, where the vulnerability class and the damage grade are defined in the EMS-98 scale (Grünthal 1998).

The methodology applied in this research consists of calculating the seismic risk using two different approaches (Labbé 2008, 2010, 2017):

- Seismic risk 1, derived from historical seismicity.

- Seismic risk 2, calculated by convolution of hazard maps and fragility curves.

The seismic risk derived from historical seismicity (macroseismic observations) is considered as the “reference” for comparing with the seismic risk obtained by convolution.
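Seismic risk 2 is a convolution of a hazard curve with a fragility curve. The sketch below assumes, for illustration only, a power-law hazard curve lambda(a) = k0 * a^(-k) and a lognormal fragility curve for one vulnerability class and damage grade, and integrates numerically over ln(a). For this particular pair a closed form exists, k0 * median^(-k) * exp(k^2 beta^2 / 2), which is handy for checking the integration. The parameters are hypothetical, not EMS-98 fragility values.

```python
import math
import numpy as np


def lognormal_fragility(a, median, beta):
    """P(damage grade reached | PGA = a) for a lognormal fragility curve."""
    z = np.log(np.asarray(a, dtype=float) / median) / beta
    return 0.5 * np.vectorize(math.erfc)(-z / math.sqrt(2.0))


def annual_damage_rate(k0, k, median, beta, n=4000):
    """Convolve a power-law hazard curve lambda(a) = k0 * a**(-k) with a
    lognormal fragility curve: integral of P(D | a) |d lambda / da| da.
    For small values this annual rate approximates the annual probability."""
    x = np.linspace(math.log(median) - 10.0 * beta, math.log(median) + 10.0 * beta + 30.0 / k, n)
    a = np.exp(x)
    # With x = ln a, |d lambda / da| da = k * k0 * a**(-k) dx.
    integrand = lognormal_fragility(a, median, beta) * k * k0 * a ** (-k)
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(x)))


# Hypothetical hazard curve and fragility for one vulnerability class / damage grade.
k0, k, median, beta = 1.0e-4, 2.5, 0.3, 0.4
numeric = annual_damage_rate(k0, k, median, beta)
closed_form = k0 * median ** (-k) * math.exp(0.5 * (k * beta) ** 2)
print(numeric, closed_form)
```

In practice the hazard curve comes from the hazard map at each location, and the resulting risk is compared with Seismic risk 1 derived from the macroseismic observations.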

Organization

Funding member: EDF

Type: internship, contract (K. Drif, P. Labbé)

Collaboration: EDF R&D, P. Labbé

Status: Ended

Results

Report

Testing Site-Specific Hazard for the Diablo Canyon Power Plant Using Precarious Rocks

Report available! "Testing Site-Specific Hazard for the Diablo Canyon Power Plant Using Precarious Rocks" by N. Abrahamson (3.25 MB)

Motivations

Testing hazard curves at a specific site requires a sufficiently long observation period. One way to obtain long observation periods is to use the existence of fragile geologic features (FGS) to test the hazard. This requires developing fragility curves and ages for the FGS, which can then be combined with the site-specific hazard curves to compute the probability that the FGS would not have failed during its lifetime.
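The survival test described above can be sketched as follows, assuming a lognormal fragility (median and log-standard deviation of the toppling PGA), a hazard curve given at discrete PGA levels, and Poisson occurrence, so that the probability of surviving an age T is exp(-rate_fail * T). Names and inputs are hypothetical, and the sketch does not treat uncertainty in the age or the fragility.

```python
import math
import numpy as np


def norm_cdf(z):
    """Standard normal CDF, vectorized over arrays."""
    return 0.5 * np.vectorize(math.erfc)(-np.asarray(z, dtype=float) / math.sqrt(2.0))


def toppling_rate(pga_levels, exceed_rates, median, beta):
    """Annual rate of failure of a fragile feature from a discretized hazard
    curve (annual rates of exceeding increasing PGA levels) and a lognormal
    fragility curve (median and log-standard deviation of the toppling PGA)."""
    a = np.asarray(pga_levels, dtype=float)
    lam = np.asarray(exceed_rates, dtype=float)
    bin_rates = lam[:-1] - lam[1:]                 # rate of PGA within each bin
    p_fail = norm_cdf(np.log(np.sqrt(a[:-1] * a[1:]) / median) / beta)
    tail = lam[-1] * norm_cdf(np.log(a[-1] / median) / beta)  # above the last level
    return float(np.sum(p_fail * bin_rates) + tail)


def survival_probability(failure_rate, age_years):
    """Probability that the feature has not toppled during its age (Poisson)."""
    return math.exp(-failure_rate * age_years)


# Hypothetical site hazard curve and rock fragility.
pga = np.logspace(-2, 1, 200)
hazard_curve = 1.0e-4 * pga ** -2.5
rate = toppling_rate(pga, hazard_curve, median=0.3, beta=0.4)
print(rate, survival_probability(rate, 10_000.0))
```

A very small survival probability for a rock that has in fact survived for its dated age indicates that the hazard curve (or the fragility) is likely too high at the relevant ground-motion levels.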

Program of research

As part of the DOE/PG&E extreme ground motion project (Hanks, 2013), Baker et al (2013) developed a methodology for testing site-specific hazard curves based on the existence of FGS at the site. Fragile geologic features near the Diablo Canyon Power Plant (DCPP) include precarious rocks. As part of the DCPP Long-Term Seismic Program (LTSP), data collection studies by Stirling and Rood in 2016 and 2017 were funded to characterize the fragilities and ages of precarious rocks near DCPP. This report describes the application of the Baker et al (2013) methodology to three precarious rocks near DCPP based on the fragility and age characterization given in Caklais (2017) and in Stirling et al (2017).

The Caklais (2017) master's thesis is given in appendix 1. It includes detailed descriptions of the precarious rocks, the cosmogenic age dating used to determine how long the rocks have been in their precarious geometry, measurements of the rock geometries, and the development of fragility models for each rock. In this trial application, we used the fragility models and ages of the precarious rocks given in Caklais (2017) with site-specific hazard curves for the precarious rock locations. The Caklais (2017) report also includes comparisons with hazard curves, but these are not based on the updated hazard for the precarious rock sites and should not be used.

Organization

Funding member: PG&E

Type: contract

Collaboration:

Status: Ended

Results

Report

Evaluation of SERA seismic hazard assessment using Bayesian approaches

Report available! Evaluation of SERA seismic hazard assessment results using Bayesian approaches (7.59 MB)

Motivations

The main objective of this study is to evaluate the seismic hazard assessment performed in the framework of the SERA project. More specifically, observations will be compared with the predictions of the SERA seismic hazard assessment.

During the last decade, new methodologies to evaluate probabilistic seismic hazard analysis (PSHA) results have appeared, mainly in France and Italy, in particular in the framework of the SIGMA project. Two published methodologies (Viallet et al., 2017 and Secanell et al., 2018) are based on a Bayesian approach and have many similarities.

Program of research

The study aims to evaluate the SERA seismic hazard assessment results, mainly using the Viallet et al. (2017) method. A second objective of the project is to improve an existing Python software for PSHA testing, so as to make it a more user-friendly tool that could be distributed to the community. The software will also undergo verification and validation (V&V).
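The common idea behind Bayesian testing approaches of this kind can be sketched as an update of logic-tree branch weights with a Poisson likelihood of the number of observed exceedances at each site. This Python sketch is a generic illustration under stated assumptions (independent sites, a single test level, hypothetical names and numbers), not the Viallet et al. (2017) or Secanell et al. (2018) method.

```python
import math


def poisson_pmf(n, mean):
    """Probability of n events for a Poisson variable with the given mean."""
    return math.exp(-mean) * mean**n / math.factorial(n)


def update_branch_weights(prior_weights, branch_rates, observations):
    """Update logic-tree branch weights from observed exceedance counts.

    branch_rates[b][s] -- annual rate of exceeding the test level at site s
                          predicted by branch b
    observations[s]    -- (number of observed exceedances, observation years)
    Sites are treated as independent, which is a simplifying assumption; with
    many sites, summing log-likelihoods would avoid numerical underflow."""
    posterior = []
    for w, rates in zip(prior_weights, branch_rates):
        like = 1.0
        for rate, (count, years) in zip(rates, observations):
            like *= poisson_pmf(count, rate * years)
        posterior.append(w * like)
    total = sum(posterior)
    return [p / total for p in posterior]


# Hypothetical: two branches, two stations each observed for 100 years.
weights = update_branch_weights([0.5, 0.5], [[0.01, 0.01], [0.001, 0.001]],
                                [(1, 100.0), (2, 100.0)])
print(weights)
```

Branches whose predicted rates are consistent with the observed counts gain weight; comparing prior and posterior weights is one way to summarise how well the observations support each branch.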

Organization

Funding member: Orano, EDF

Type: contract (S. Drouet, R. Secanell)

Collaboration: Fugro, France

Status: on-going

Results

Report + software